ASIA ELECTRONICS INDUSTRY: YOUR WINDOW TO SMART MANUFACTURING

NVIDIA AI Yields Breakthrough in New MLPerf Result

NVIDIA Corporation has been working relentlessly to build AI factories, speeding the training and deployment of next-generation AI applications that take advantage of the latest advances in training and inference.

In a recent press briefing, NVIDIA announced that its AI platform delivered the highest performance at scale on every benchmark in the latest round of MLPerf Training. The NVIDIA AI platform also powered every result submitted on the round's toughest large language model (LLM)-focused test, the Llama 3.1 405B pretraining benchmark.

Dave Salvator, Director of Accelerated Computing Products at NVIDIA, said the latest round of MLPerf Training is the 12th since the benchmark's introduction in 2018. Notably, the NVIDIA platform was the only one in this round to submit results on every MLPerf Training v5.0 benchmark.

According to Salvator, this underscores the NVIDIA platform's exceptional performance and versatility across a wide array of AI workloads, spanning LLMs, recommendation systems, multimodal LLMs, object detection, and graph neural networks.

Advancements in Blackwell Architecture

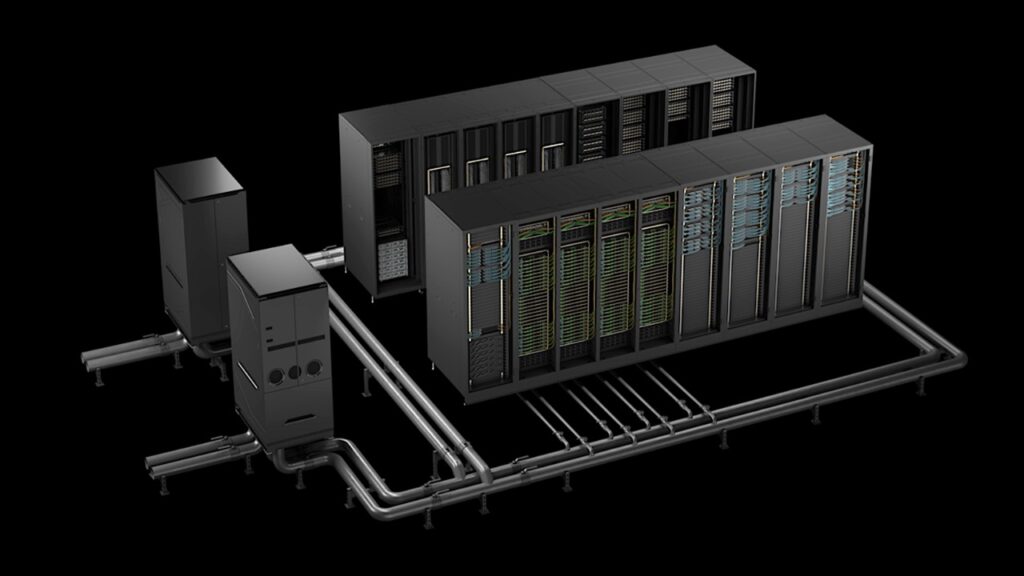

The NVIDIA submissions to the MLPerf Training benchmark used two AI supercomputers powered by the NVIDIA Blackwell platform: Tyche, built using NVIDIA GB200 NVL72 rack-scale systems, and Nyx, based on NVIDIA DGX B200 systems.

NVIDIA also collaborated with CoreWeave and IBM to submit GB200 NVL72 results using a total of 2,496 Blackwell GPUs and 1,248 NVIDIA Grace CPUs.

On the new Llama 3.1 405B pretraining benchmark, Blackwell delivered 2.2x greater performance than the previous-generation architecture at the same scale. Meanwhile, on the Llama 2 70B LoRA fine-tuning benchmark, NVIDIA DGX B200 systems, powered by eight Blackwell GPUs, delivered 2.5x more performance than a submission using the same number of GPUs in the prior round.

These performance leaps highlight advancements in the Blackwell architecture, including high-density liquid-cooled racks, 13.4 TB of coherent memory per rack, fifth-generation NVIDIA NVLink and NVIDIA NVLink Switch interconnect technologies for scale-up, and NVIDIA Quantum-2 InfiniBand networking for scale-out. In addition, innovations in the NVIDIA NeMo Framework software stack raise the bar for next-generation multimodal LLM training, which is critical for bringing agentic AI applications to market.

Salvator said these agentic AI-powered applications will one day run in AI factories, the engines of the agentic AI economy, and could be used in almost every industry.

Gold Standard in AI

The MLPerf Training benchmark suite measures how fast systems can train models to a target quality metric. More broadly, the MLPerf benchmarks provide unbiased evaluations of training and inference performance for hardware, software, and services.

The MLPerf benchmarks, considered the gold standard in AI benchmarking, were developed by MLCommons, a consortium of AI leaders from academia, research labs, and industry.

All benchmarks are conducted under prescribed conditions. To stay on the cutting edge of industry trends, MLPerf continues to evolve, holding new rounds of testing at regular intervals and adding new workloads that represent the state of the art in AI.

05 June 2025