ASIA ELECTRONICS INDUSTRY: YOUR WINDOW TO SMART MANUFACTURING

NVIDIA Boosts Grit in AI, Eyes New Chip Yearly

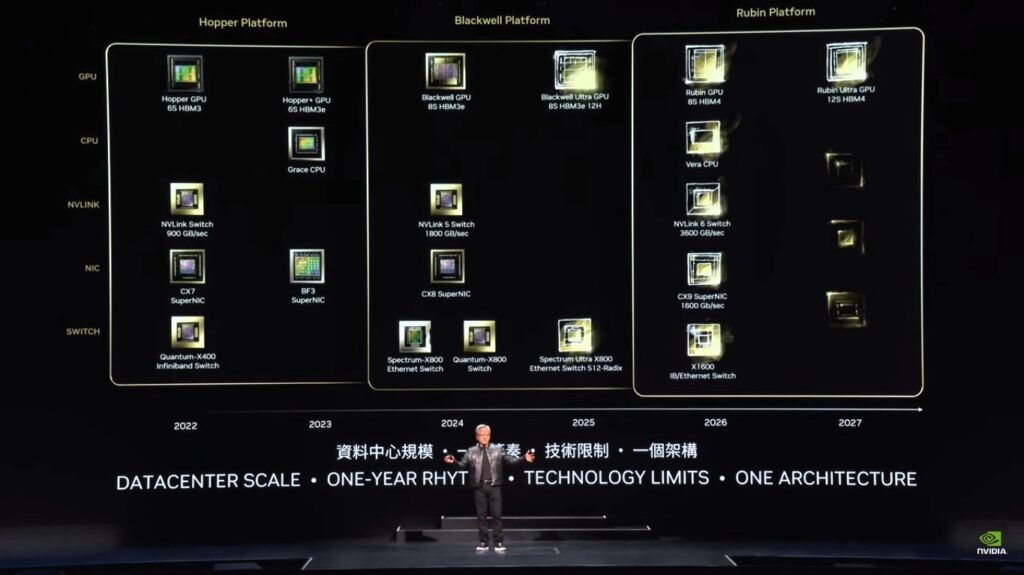

At the keynote stage ahead of COMPUTEX 2024 in Taipei, NVIDIA founder and CEO Jensen Huang emphasized the company’s resolve in the field of artificial intelligence (AI), confirming that its GPU roadmap will follow a “one-year rhythm”. The company will also introduce a new Arm-based CPU, pushing technology to its limits.

“Our company has a one-year rhythm. Our basic philosophy is very simple: build the entire data center scale, disaggregate and sell to you parts on a one-year rhythm, and push everything to technology limits,” Huang explained.

AI Accelerators

Barely three months after NVIDIA introduced its breakthrough Blackwell GPU architecture in March, Huang said a new Blackwell Ultra GPU will arrive in 2025. The Blackwell platform followed the Hopper platform, which produced the 65 HBM3-variant Hopper GPU in 2022 and the Hopper+ GPU in 2023.

Huang also revealed Blackwell’s successor, the Rubin platform, for the first time. The upcoming platform will bring the 8S HBM4 Rubin GPU in 2026, followed by the 12S HBM4 Rubin Ultra GPU in 2027.

In addition, the company will introduce the Arm-based Vera platform CPU, three years after it introduced the Grace CPU. Huang also announced advanced networking with NVLink 6, the CX9 SuperNIC and the X1600 converged InfiniBand/Ethernet switch.

Huang spoke to an audience of more than 6,500 industry leaders, press, entrepreneurs, gamers, creators and AI enthusiasts at the glass-domed National Taiwan University Sports Center in Taipei.

Encompasses Many Industries

During the keynote, which lasted nearly two hours, Huang underscored the role generative AI will play across many industries and how it will become a catalyst for innovation and growth.

“Generative AI is reshaping industries and opening new opportunities for innovation and growth,” said Huang. He added, “Today, we’re at the cusp of a major shift in computing… The intersection of AI and accelerated computing is set to redefine the future.”

Huang’s keynote centered on the readiness of NVIDIA’s accelerated platforms, whether through AI PCs and consumer devices featuring a host of NVIDIA RTX-powered capabilities or through enterprises building and deploying AI factories with NVIDIA’s full-stack computing platform.

“The future of computing is accelerated,” Huang said. “With our innovations in AI and accelerated computing, we’re pushing the boundaries of what’s possible and driving the next wave of technological advancement.”

NVIDIA is driving down the cost of turning data into intelligence, Huang explained as he began his talk.

“Accelerated computing is sustainable computing,” he emphasized, outlining how the combination of GPUs and CPUs can deliver up to a 100x speedup while only increasing power consumption by a factor of three, achieving 25x more performance per Watt over CPUs alone.

Propels Gen AI Applications

Huang also announced NVIDIA inference microservices (NIM), which let the world’s 28 million developers easily create generative AI applications. NIM provides models as optimized containers that can be deployed on clouds, data centers or workstations.

NIM also enables enterprises to maximize their infrastructure investments. For example, running Meta Llama 3-8B in a NIM produces up to 3x more generative AI tokens on accelerated infrastructure than without NIM.

Nearly 200 technology partners, including Cadence, Cloudera, Cohesity, DataStax, NetApp, Scale AI and Synopsys, are integrating NIM into their platforms to speed generative AI deployments for domain-specific applications such as copilots, code assistants, digital human avatars and more.

02 June 2024