ASIA ELECTRONICS INDUSTRY: YOUR WINDOW TO SMART MANUFACTURING

New NVIDIA Rubin GPU to Power AI Systems

NVIDIA has announced NVIDIA Rubin CPX, a new class of GPU purpose-built for massive-context processing. This enables AI systems to handle million-token software coding and generative video with groundbreaking speed and efficiency.

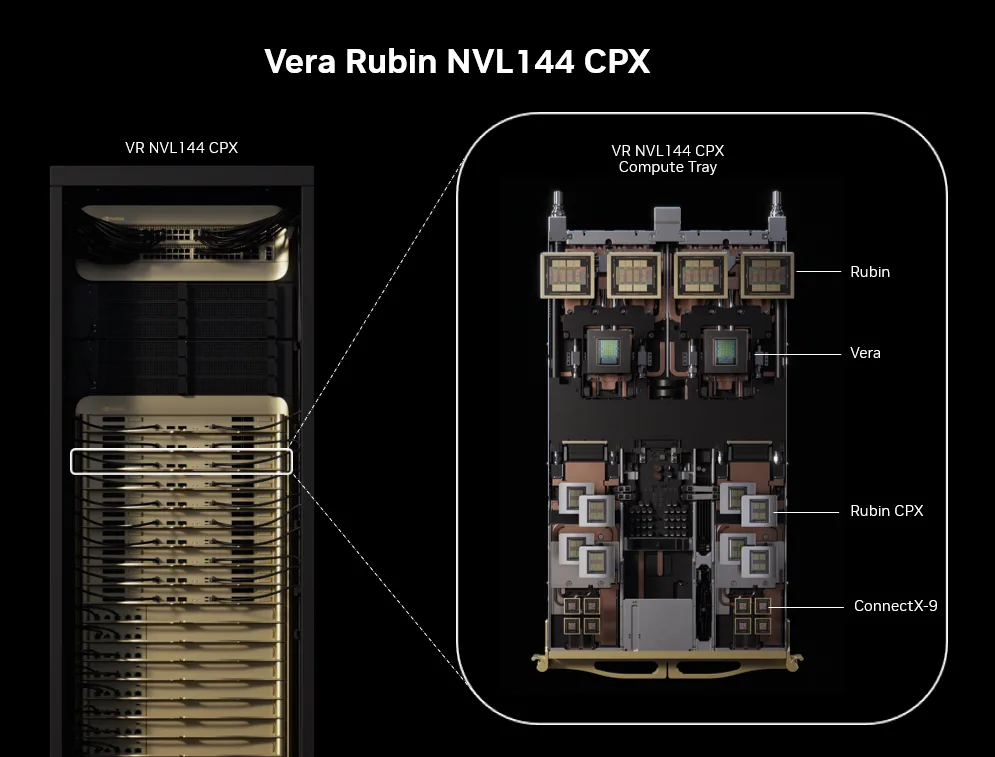

Rubin CPX works hand in hand with NVIDIA Vera CPUs and Rubin GPUs inside the new NVIDIA Vera Rubin NVL144 CPX platform. This integrated NVIDIA MGX system packs 8 exaflops of AI compute to provide 7.5x more AI performance than NVIDIA GB300 NVL72 systems, as well as 100TB of fast memory and 1.7 petabytes per second of memory bandwidth in a single rack. A dedicated Rubin CPX compute tray will also be offered for customers looking to reuse existing Vera Rubin NVL144 systems.
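The rack-level figures above imply a baseline for the GB300 NVL72 comparison. The short calculation below is a rough sketch that uses only the numbers quoted in this article. It assumes that "7.5x more AI performance" is a simple ratio of AI compute measured on the same basis, which the announcement does not spell out. The variable names are illustrative.

```python
# Figures quoted in the article for Vera Rubin NVL144 CPX (single rack).
NVL144_CPX_EXAFLOPS = 8.0   # AI compute
SPEEDUP_VS_GB300 = 7.5      # stated multiple over GB300 NVL72

# Assumption: the 7.5x figure is a plain ratio of AI compute,
# so the baseline is the rack figure divided by the multiple.
implied_gb300_exaflops = NVL144_CPX_EXAFLOPS / SPEEDUP_VS_GB300

print(f"Implied GB300 NVL72 baseline: {implied_gb300_exaflops:.2f} exaflops")
```

Under that assumption, the comparison system works out to a little over 1 exaflop of AI compute per rack.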

“The Vera Rubin platform will mark another leap in the frontier of AI computing — introducing both the next-generation Rubin GPU and a new category of processors called CPX,” said Jensen Huang, founder and CEO of NVIDIA. “Just as RTX revolutionized graphics and physical AI, Rubin CPX is the first CUDA GPU purpose-built for massive-context AI, where models reason across millions of tokens of knowledge at once.”

Delivers High Performance, Energy Efficiency

NVIDIA Rubin CPX enables the highest performance and token revenue for long-context processing, far beyond what today’s systems were designed to handle. This transforms AI coding assistants from simple code-generation tools into sophisticated systems that can comprehend and optimize large-scale software projects.

To process video, AI models can require up to 1 million tokens for an hour of content, pushing the limits of traditional GPU compute. Purpose-built for this, Rubin CPX integrates video decoders and encoders, as well as long-context inference processing, in a single chip for unprecedented capabilities in long-format applications such as video search and high-quality generative video.
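To put the video figure in perspective, the sketch below converts "up to 1 million tokens for an hour of content" into a per-second rate. It treats the quoted figure as an upper bound and assumes that token count scales linearly with video length, which the article does not state. The function name is hypothetical.

```python
# Upper-bound figure quoted in the article.
TOKENS_PER_HOUR = 1_000_000
SECONDS_PER_HOUR = 3600

tokens_per_second = TOKENS_PER_HOUR / SECONDS_PER_HOUR


def tokens_for_video(minutes: float) -> float:
    """Estimate the token budget for a clip, assuming linear scaling."""
    return tokens_per_second * minutes * 60


print(f"About {tokens_per_second:.0f} tokens per second of video")
print(f"A 10-minute clip: about {tokens_for_video(10):,.0f} tokens")
```

At that rate, even a short clip reaches context lengths in the hundreds of thousands of tokens.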

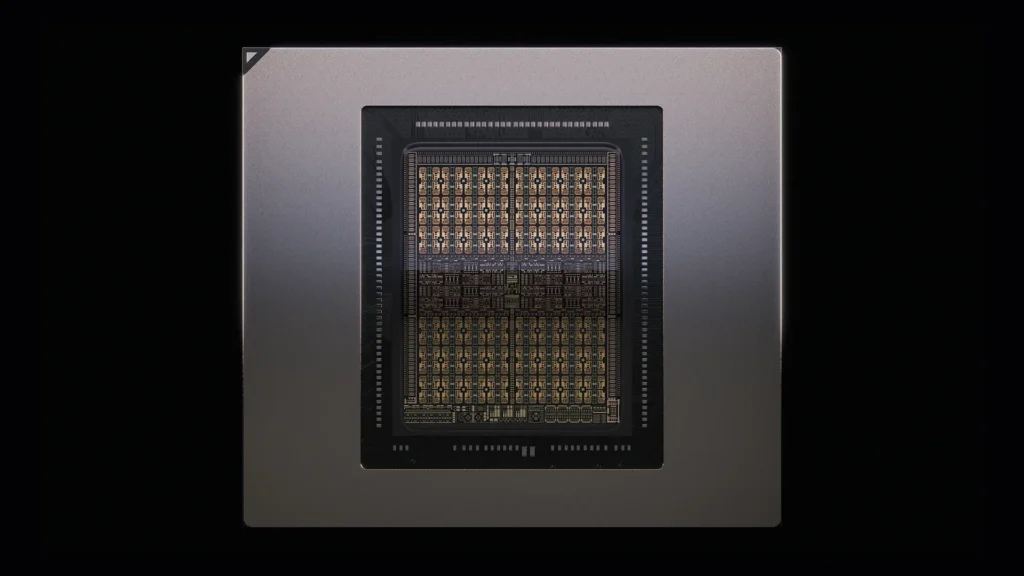

Built on the NVIDIA Rubin architecture, the Rubin CPX GPU uses a cost‑efficient, monolithic die design packed with powerful NVFP4 computing resources and is optimized to deliver extremely high performance and energy efficiency for AI inference tasks.

Advancements Offered by Rubin CPX

Rubin CPX delivers up to 30 petaflops of compute with NVFP4 precision for the highest performance and accuracy. It features 128GB of cost-efficient GDDR7 memory to accelerate the most demanding context-based workloads. In addition, it delivers 3x faster attention capabilities compared with NVIDIA GB300 NVL72 systems — boosting an AI model’s ability to process longer context sequences without a drop in speed.

Rubin CPX is offered in multiple configurations, including the Vera Rubin NVL144 CPX, that can be combined with the NVIDIA Quantum‑X800 InfiniBand scale-out compute fabric or the NVIDIA Spectrum-X™ Ethernet networking platform with NVIDIA Spectrum-XGS Ethernet technology and NVIDIA ConnectX®-9 SuperNICs™. Vera Rubin NVL144 CPX enables companies to monetize at an unprecedented scale, with $5 billion in token revenue for every $100 million invested.
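The monetization claim reduces to a simple ratio. The sketch below uses only the two dollar figures quoted above. It makes no assumption about the time period, token pricing, or operating costs behind NVIDIA's estimate, none of which the article gives. The function name is hypothetical.

```python
def revenue_multiple(token_revenue_usd: float, investment_usd: float) -> float:
    """Return token revenue as a multiple of the amount invested."""
    return token_revenue_usd / investment_usd


# Figures quoted in the article.
multiple = revenue_multiple(token_revenue_usd=5e9, investment_usd=100e6)

print(f"Claimed token revenue per dollar invested: {multiple:.0f}x")
```

In other words, the claim is a 50-fold return in token revenue on capital invested, as stated by NVIDIA.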

10 September 2025