ASIA ELECTRONICS INDUSTRY: YOUR WINDOW TO SMART MANUFACTURING

Cutting-Edge LiDAR by Toshiba Boosts Digital Twin

Toshiba Corporation has announced world-first*1 advances in Light Detection and Ranging (LiDAR) technologies. Using only data acquired by the LiDAR itself, the new technologies achieve an unmatched 99.9%*2 accuracy in object tracking and 98.9% accuracy in object recognition. They also significantly improve environmental robustness and broaden the range of applications in which LiDAR can be used.

LiDAR uses a laser to measure distances to objects. It has long been a mainstay of advanced driver assistance and autonomous driving systems. More recently, it has been combined with cameras to create digital twins: virtual replicas of real-world objects and systems that can be used to model performance, identify problems, and improve operations in many industries.
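A common way for a LiDAR to turn a laser pulse into a distance is time of flight: the sensor times the pulse's round trip to the object and back, then halves the path length. The sketch below assumes that simple pulsed model. The article does not say which ranging method Toshiba's LiDAR uses, and the function names are illustrative.

```python
# Speed of light in vacuum, m/s (the value in air is only ~0.03% lower).
C = 299_792_458.0

def tof_distance_m(round_trip_s: float) -> float:
    """Distance to a target from the round-trip time of a laser pulse.

    The pulse travels to the object and back, so the one-way distance
    is half of (speed of light x elapsed time).
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return C * round_trip_s / 2.0

def round_trip_s(distance_m: float) -> float:
    """Inverse relation: round-trip time for a target at distance_m."""
    return 2.0 * distance_m / C

if __name__ == "__main__":
    for d in (40.0, 120.0, 350.0):
        print(f"{d:6.1f} m -> {round_trip_s(d) * 1e9:7.1f} ns round trip")
```

At the 350m range reported later in this article, for example, the round trip takes only about 2.3 microseconds, and each nanosecond of timing error corresponds to roughly 15cm of range error.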

Digital twins differ from conventional simulations in that they can reflect real-world changes in real time. Conventional simulations could not capture machinery wear and tear as it happened. Today, however, sensors and AI can collect and analyze vast amounts of data from operating production lines and equipment, allowing real-world events to be reproduced accurately in virtual form.

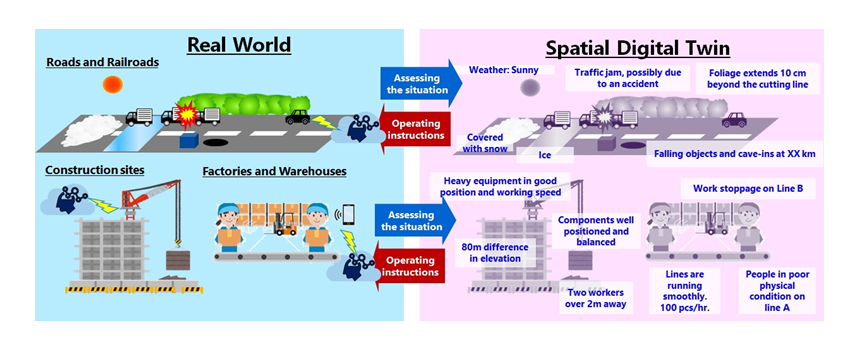

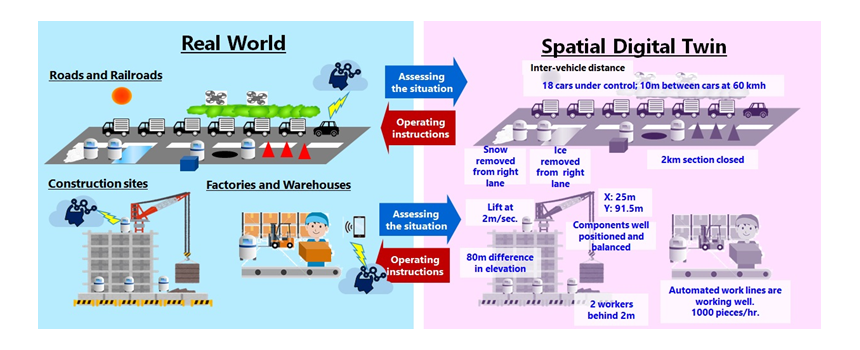

Beyond equipment digital twins that model specific processes, there is an emerging need for spatial digital twins, which reproduce entire factories or urban areas (Fig.1). These advanced digital twins will support the automation of all kinds of mobility equipment and the overall optimization of factories and logistics warehouses. In cities, they will help mitigate problems such as those caused by accidents and traffic congestion (Fig.2).

Creating spatial digital twins requires wide-area, high-precision space-sensing technologies that can recognize and track objects, even in bad weather. LiDAR is seen as the technology that meets these requirements. However, because precise recognition and tracking are difficult with LiDAR data alone, LiDAR is often paired with a camera, and the 3D data from the LiDAR is combined with the camera's 2D data. Completely eliminating spatial misalignment between the two data sets is difficult, and accuracy also degrades in bad weather, such as rain or fog, or when the characteristics of the installation location create blind spots.
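To see why camera and LiDAR misalignment is hard to eliminate completely, consider how LiDAR points are typically mapped onto a camera image: an extrinsic calibration (the rotation and translation between the two sensors) followed by a pinhole projection. The sketch below is a generic illustration, not Toshiba's pipeline. The extrinsics are reduced to a single yaw angle plus a translation for brevity, and the focal length, image center, and 0.1° calibration error are hypothetical values.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]

def yaw_rotate(p: Vec3, yaw_deg: float) -> Vec3:
    """Rotate point p about the vertical (y) axis by yaw_deg degrees.

    Assumed camera convention: x right, y down, z forward (optical axis).
    """
    a = math.radians(yaw_deg)
    x, y, z = p
    return (math.cos(a) * x + math.sin(a) * z,
            y,
            -math.sin(a) * x + math.cos(a) * z)

def project_to_pixel(p_lidar: Vec3, yaw_deg: float, t: Vec3,
                     fx: float, fy: float, cx: float, cy: float) -> Tuple[float, float]:
    """Project a LiDAR point into camera pixel coordinates.

    Hypothetical extrinsics: p_cam = R_yaw * p_lidar + t.
    Intrinsics: pinhole model with focal lengths fx, fy and
    principal point (cx, cy), all in pixels.
    """
    x, y, z = yaw_rotate(p_lidar, yaw_deg)
    x, y, z = x + t[0], y + t[1], z + t[2]
    if z <= 0:
        raise ValueError("point is behind the camera")
    return (fx * x / z + cx, fy * y / z + cy)

if __name__ == "__main__":
    K = dict(fx=1000.0, fy=1000.0, cx=640.0, cy=360.0)  # hypothetical intrinsics
    target = (0.0, 0.0, 100.0)                           # point 100 m straight ahead
    ideal = project_to_pixel(target, 0.0, (0.0, 0.0, 0.0), **K)
    off = project_to_pixel(target, 0.1, (0.0, 0.0, 0.0), **K)  # 0.1 deg yaw error
    print(f"horizontal shift: {off[0] - ideal[0]:.2f} px")
```

With these assumed values, a 0.1° calibration error moves a point 100m away by about 1.7 pixels, while a 0.5m-wide pedestrian at that distance spans only about 5 pixels. A small residual error, or vibration that disturbs the sensor mounting, can therefore attach depth values to the wrong object.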

To address these issues, Toshiba has developed three world-first LiDAR technologies that advance the realization of high-precision spatial digital twins.

1. 2D/3D Fusion AI

Precise recognition and tracking of objects using only data acquired by LiDAR.

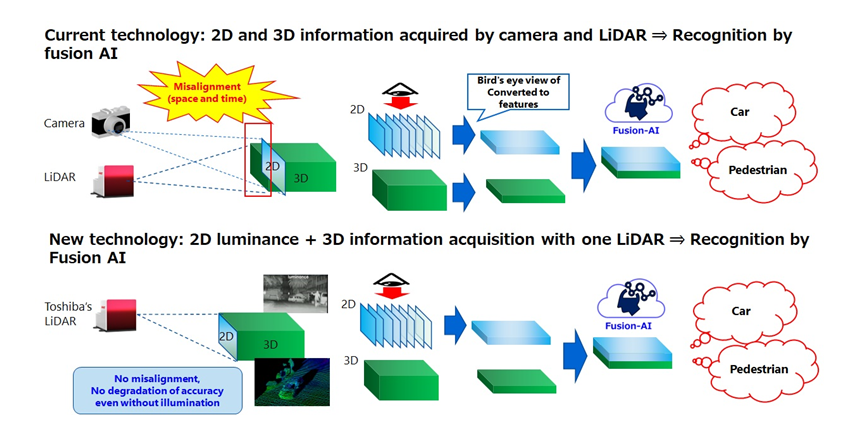

Recognizing that a LiDAR acquires both 2D luminance data and 3D data, Toshiba fused the two and applied AI to object recognition (Fig.3, bottom). Because all the data are captured by the same LiDAR pixels at the same time, there is no need for the angle-of-view and frame-rate adjustments that are required when a camera is used with a LiDAR (Fig.3, top). This eliminates the accuracy degradation caused by misalignment-correction errors and vibration. The AI recognized objects, including vehicles and people, with 98.9% accuracy, the world's highest, and tracked them with 99.9% accuracy without a camera, even at night without lighting (Fig.4).
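The key advantage described above is that luminance and distance share one pixel grid and one timestamp. The sketch below illustrates only that idea: it assembles a two-channel, per-pixel input of the kind a recognition network could consume. The `LidarFrame` type and `fuse` function are hypothetical; the article does not describe the network architecture or input format that Toshiba's AI uses.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class LidarFrame:
    """One LiDAR frame; both channels come from the same pixels at the same time."""
    width: int
    height: int
    luminance: List[float]  # 2D data: intensity per pixel, row-major
    depth_m: List[float]    # 3D data: measured distance per pixel, row-major

def fuse(frame: LidarFrame) -> List[List[List[float]]]:
    """Stack luminance and depth into an H x W x 2 nested list.

    Pixel (r, c) of each channel was acquired by the same detector
    element in the same frame, so no reprojection, resampling, or
    time synchronization is needed: fusion is per-pixel concatenation.
    """
    n = frame.width * frame.height
    if len(frame.luminance) != n or len(frame.depth_m) != n:
        raise ValueError("channel sizes must equal width * height")
    return [
        [[frame.luminance[r * frame.width + c], frame.depth_m[r * frame.width + c]]
         for c in range(frame.width)]
        for r in range(frame.height)
    ]

if __name__ == "__main__":
    f = LidarFrame(width=2, height=1, luminance=[0.2, 0.8], depth_m=[50.0, 51.5])
    print(fuse(f))
```

Compared with the camera-plus-LiDAR case, there is no calibration step here that can drift, which is the source of the robustness the article describes.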

2. Rain/Fog Removal Algorithm

Minimizing rain and fog artifacts that degrade LiDAR measurement accuracy.

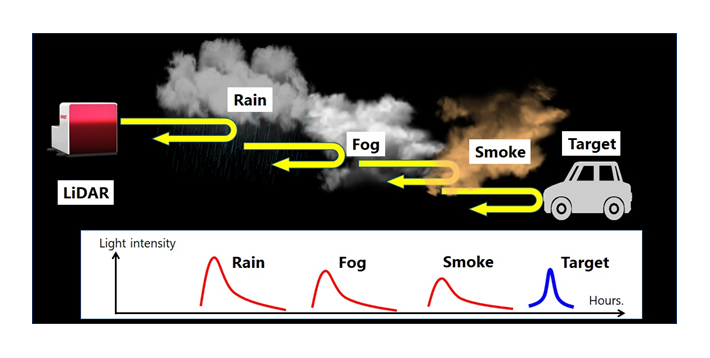

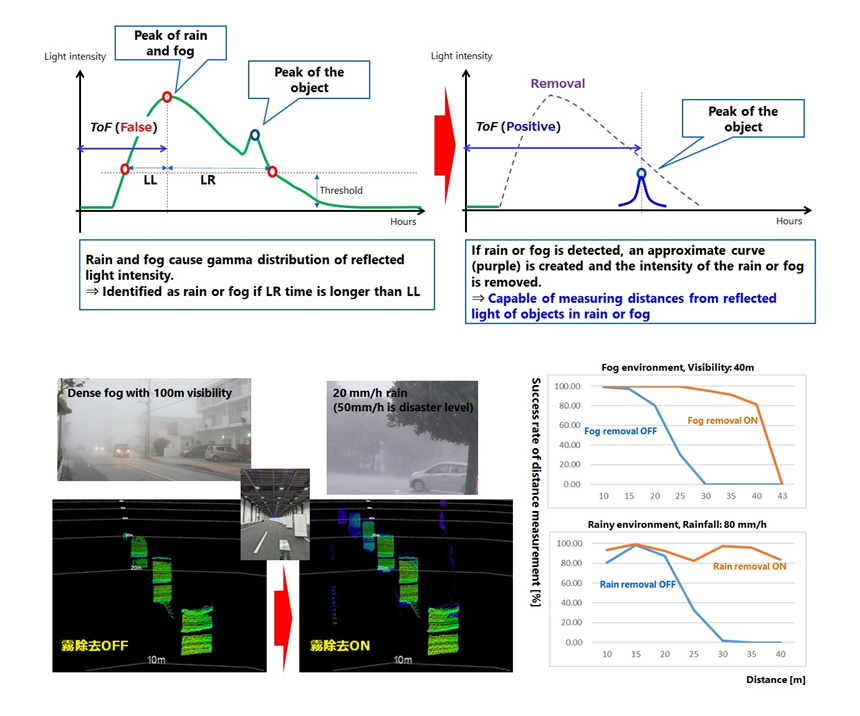

LiDAR manufacturers incorporate into their products a multi-echo function designed to detect only the light reflected from objects. In rain or fog, however, extracting the faint signals reflected from objects is difficult, which degrades accuracy.

Toshiba's solution is an algorithm that uses an A/D converter to convert the analog signal of light reflected from objects in rain and fog into digital values of reflected-light intensity. The algorithm uses the characteristic waveform of light reflected by rain or fog to determine the weather conditions, and it removes, in their entirety, any waveforms determined to be rain or fog (Fig.5). In testing, the algorithm roughly doubled the detectable distance: from 20m to 40m in heavy rain of 80mm per hour, and from 17m to 35m in fog with 40m visibility (Fig.6).
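The article describes the steps (digitize, classify by waveform shape, remove whole rain or fog returns) but not the classification criterion. The sketch below shows that overall flow under a loudly hypothetical assumption: a return much wider than the emitted laser pulse is treated as scattering from rain or fog. The function names, the width rule, and the factor of 2 are illustrative only; Toshiba's actual waveform features are not disclosed.

```python
from typing import List, Optional, Tuple

C = 299_792_458.0  # speed of light, m/s

def segment_returns(samples: List[int], threshold: int) -> List[Tuple[int, int]]:
    """Split a digitized (A/D-converted) waveform into returns.

    A return is a run of consecutive samples >= threshold.
    Returns (start, end) index pairs with end exclusive.
    """
    returns: List[Tuple[int, int]] = []
    start: Optional[int] = None
    for i, s in enumerate(samples):
        if s >= threshold and start is None:
            start = i
        elif s < threshold and start is not None:
            returns.append((start, i))
            start = None
    if start is not None:
        returns.append((start, len(samples)))
    return returns

def looks_like_rain_or_fog(width: int, pulse_width: int, factor: float = 2.0) -> bool:
    """HYPOTHETICAL shape rule: droplets spread along the beam produce a
    return much wider than the emitted pulse."""
    return width > factor * pulse_width

def filter_and_range(samples: List[int], threshold: int, pulse_width: int,
                     sample_period_s: float) -> Optional[float]:
    """Drop whole returns classified as rain/fog, then range the strongest
    remaining return. Returns distance in metres, or None if nothing is left."""
    best: Optional[int] = None
    for start, end in segment_returns(samples, threshold):
        if looks_like_rain_or_fog(end - start, pulse_width):
            continue  # remove the entire return, not individual samples
        peak = max(range(start, end), key=lambda i: samples[i])
        if best is None or samples[peak] > samples[best]:
            best = peak
    if best is None:
        return None
    return C * best * sample_period_s / 2.0

if __name__ == "__main__":
    # A broad, stronger fog-like return followed by a faint, sharp object echo.
    wave = [0, 0, 5, 6, 7, 6, 5, 6, 5, 0, 0, 0, 0, 3, 5, 3, 0, 0]
    print(filter_and_range(wave, threshold=3, pulse_width=3, sample_period_s=1e-9))
```

In the example waveform, a naive "strongest return" rule would range the fog. Discarding that return as a whole leaves the fainter object echo, which is the effect the article attributes to waveform-level removal.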

3. Variable Measurement Range Technology

Changing the measurement range, which is determined by the LiDAR's ranging distance and angle of view.

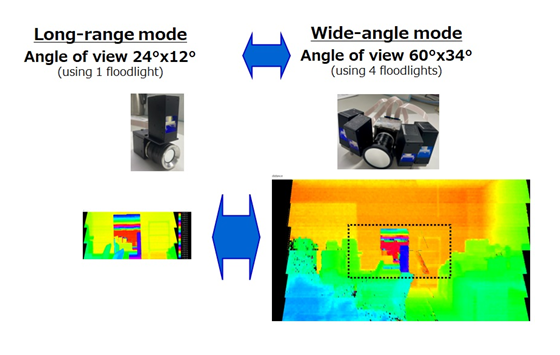

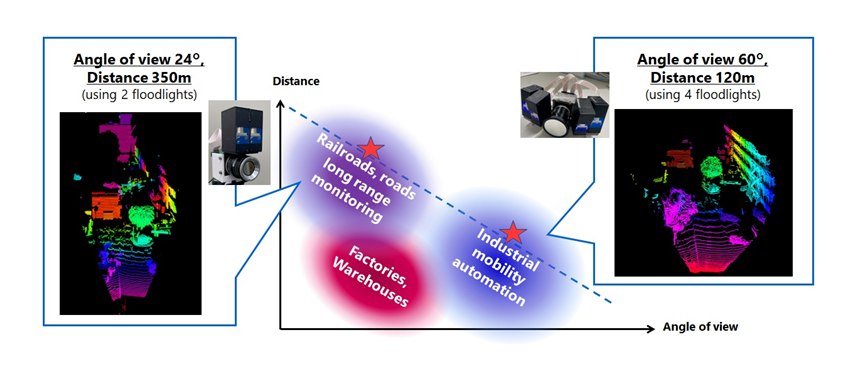

The LiDAR technology that Toshiba unveiled in March 2022 used two projectors, downsized to 71cm³*3, to increase the ranging distance 1.5 times and improve wide-angle imaging while meeting eye-safe*4 standards. Toshiba has now changed the number of projectors and the lens configuration (Fig.7, top), further extending the range and widening the angle of view sixfold.

In tests, a range of 120m was achieved with a viewing angle of 60°(H) × 34°(V) (Fig.7), and a range of 350m, the world's longest measured distance*5, was achieved with a viewing angle of 24°(H) × 12°(V) (Fig.8). This advance points the way to spatial digital twins for monitoring infrastructure such as roads and railroads, which requires long-distance measurement, and for operating automated guided vehicles in factories and warehouses, which requires wide-angle performance.
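The two test configurations illustrate a trade-off between reach and coverage. The short calculation below compares them using only the figures reported above, computing the solid angle of each rectangular field of view with the standard formula Ω = 4·asin(sin(H/2)·sin(V/2)), where H and V are the full angles. It is arithmetic on the published numbers, not a model of Toshiba's optics; the `ScanConfig` name is illustrative.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class ScanConfig:
    """One measured configuration: maximum range and full angles of view."""
    label: str
    range_m: float
    h_fov_deg: float
    v_fov_deg: float

    def solid_angle_sr(self) -> float:
        """Solid angle of a rectangular (pyramidal) field of view in steradians:
        4 * asin(sin(H/2) * sin(V/2)), with H and V the full angles."""
        h = math.radians(self.h_fov_deg) / 2.0
        v = math.radians(self.v_fov_deg) / 2.0
        return 4.0 * math.asin(math.sin(h) * math.sin(v))

# Figures reported in the tests (Fig.7 and Fig.8).
WIDE = ScanConfig("wide-angle", 120.0, 60.0, 34.0)
LONG = ScanConfig("long-range", 350.0, 24.0, 12.0)

if __name__ == "__main__":
    coverage_ratio = WIDE.solid_angle_sr() / LONG.solid_angle_sr()
    reach_ratio = LONG.range_m / WIDE.range_m
    for cfg in (WIDE, LONG):
        print(f"{cfg.label:>10}: {cfg.range_m:5.0f} m, {cfg.solid_angle_sr():.3f} sr")
    print(f"wide mode covers {coverage_ratio:.2f}x the solid angle; "
          f"long mode reaches {reach_ratio:.2f}x farther")
```

By this measure, the wide-angle configuration covers roughly 6.75 times the solid angle of the long-range configuration, while the long-range configuration reaches about 2.9 times farther.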

Together, the three new technologies greatly expand the potential of Toshiba's LiDAR and will contribute to the creation of spatial digital twins.

Toshiba will continue to research and develop environmentally robust LiDAR and aims to commercialize solid-state products*6 in FY2025. The company will contribute to building a safe and secure society with resilient infrastructure by promoting wide-ranging LiDAR applications, including mobility automation, infrastructure monitoring, and spatial digital twins.

*1: Toshiba research as of September 2023

*2: Results demonstrated for cars driven 50m to 115m away from the LiDAR and pedestrians walking 80m to 110m away from the LiDAR.

*3: Press release, March 2022

*4: Eye-safe means maintaining a laser light intensity that does not cause eye damage. Laser safety standards are set by the International Electrotechnical Commission and other organizations, and classify laser products into seven classes by safety level, based on the output of the laser equipment, the wavelength of the laser beam, and other factors. For example, Class 1, the highest safety level, is defined as safe for the eyes no matter what optics, such as lenses or telescopes, are used to focus the light, and is also called "eye-safe".

*5: Toshiba testing, as of August 25, 2023. Measured volume, image quality (resolution), and range of the measuring device at an angle of view of 24° × 12°.

*6: Toshiba has developed a solid-state light-receiving unit. A MEMS mirror with a wide scanning range must be developed separately for the light-emitting unit.