ASIA ELECTRONICS INDUSTRY: YOUR WINDOW TO SMART MANUFACTURING

Techman Robot Shows Robotic Innovations Using Mixed Reality

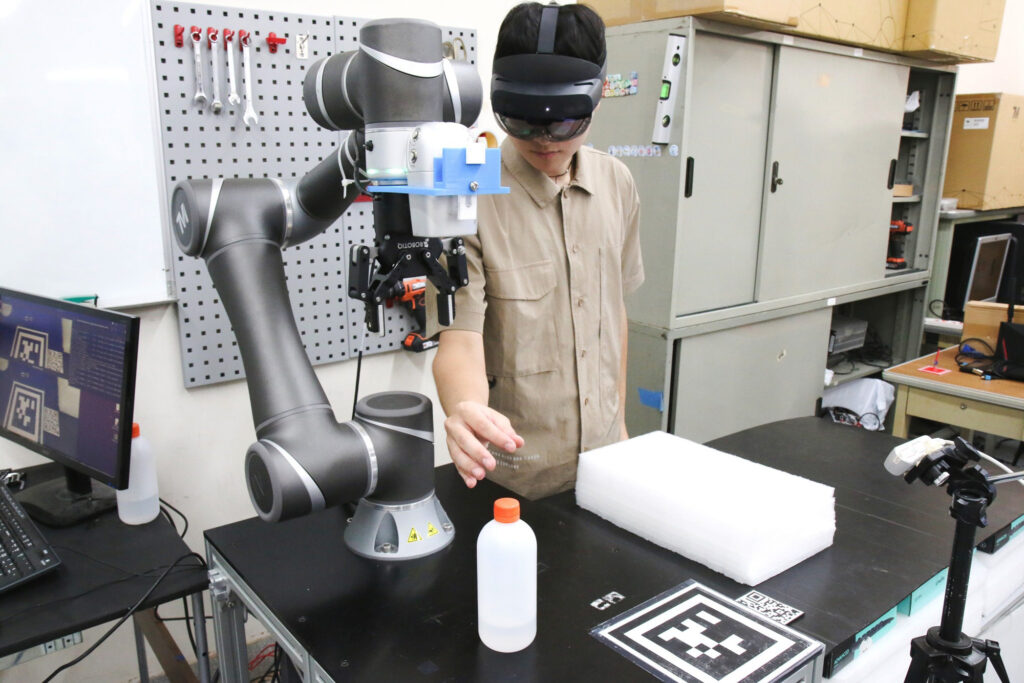

Techman Robot, in collaboration with the Intelligent System Control Integration Laboratory (ISCI Lab) from NYCU, unveiled a groundbreaking demonstration at ROSCon 2023, held from October 18 to 20 in New Orleans, Louisiana.

The highlight of the demonstration was the MR-based Robot Task Assignment and Programming system, which showcases the potential of Mixed Reality (MR) technology in robotics.

Cutting-edge Robotics Solution

The new MR-based robot system features gesture recognition: MR glasses track and interpret the user's hand movements, so users can plan robot paths intuitively in a virtual environment. Task simulation then lets users test tasks virtually before execution. Once the path is set, it is sent to the TM AI Cobot's controller for precise execution. The application aims to change how collaborative robots (cobots) are programmed, and its accessibility and user-friendliness open the door for non-professionals to deploy cobots in real work settings.

The system also highlights the TM AI Cobot, which is equipped with a built-in vision system and AI technology. This combination enables the cobot to perform tasks with high precision and efficiency. Integrating MR technology further enhances human-robot interaction and can boost efficiency across industries.

In recent years, the Robot Operating System (ROS) has been widely adopted in academia. Techman Robot’s training center offers the ROS Driver along with comprehensive teaching resources and technical support for users. Moreover, Techman Robot’s market-leading built-in vision function now supports direct image data acquisition within ROS 2. This spares users from integrating vision capabilities on their own and lets them focus on advancing their research projects.