ASIA ELECTRONICS INDUSTRY: YOUR WINDOW TO SMART MANUFACTURING

Panasonic HD's AI Tool Deals with Weather Removal

Panasonic Holdings Co., Ltd. (Panasonic HD) has jointly developed an adverse weather removal AI with researchers from the University of California, Berkeley (UC Berkeley), Nanjing University, and Peking University. The technology removes rain, snow, fog, and other elements that can significantly reduce image recognition accuracy from images. In image recognition and segmentation tasks on images with multiple adverse weather conditions, the method restored images well enough to improve recognition accuracy over conventional methods, while using over 72% fewer parameters and 39% less inference time.

Outdoor image recognition AI applications are becoming increasingly common in fields such as mobility and infrastructure. However, rain, snow, and fog can drastically alter the appearance of objects in images captured outdoors, which significantly reduces image recognition accuracy. In recent years, the task of erasing rain, snow, fog, and other elements from images, known as “weather removal,” has gained attention as a way to make AI practical in all weather conditions. Methods proposed so far either prepare a different model for each type of weather or integrate models so that they can be used in all weather conditions. However, the large amount of computation required has proven a bottleneck for these methods.

By representing the parameters for different weather conditions as weights, the joint development team created a technology that can reliably remove the influence of adverse weather. It does so with a small number of parameters in a single model that handles multiple weather elements and tasks. The proposed method can be applied in a variety of situations that require high-precision image recognition in all weather conditions, such as danger detection systems for in-vehicle sensors and security cameras.

The technology has been internationally recognized and presented at the 38th Annual AAAI Conference on Artificial Intelligence (AAAI 2024).

Overview

With the advancement of AI technology, a growing number of situations require robust image recognition outdoors, such as in the mobility and infrastructure fields. However, outdoor environments present many uncontrollable variables. In particular, rain, snow, and fog can drastically alter the appearance of objects in images captured outdoors. This significantly reduces image recognition accuracy, which is an issue in industrial applications. Research and development on adverse weather removal tasks, such as raindrop removal and fog removal (“dehazing”), is actively underway.

Efforts to build AI models (“expert models”) specialized for specific weather conditions and tasks are showing some results. However, in the real world, multiple adverse weather elements may be present at the same time, which calls for methods capable of more reliable judgment. Research has therefore been conducted on ensemble models that mix multiple expert models. However, because the number of parameters increases dramatically, no existing model is practical in terms of computational complexity.

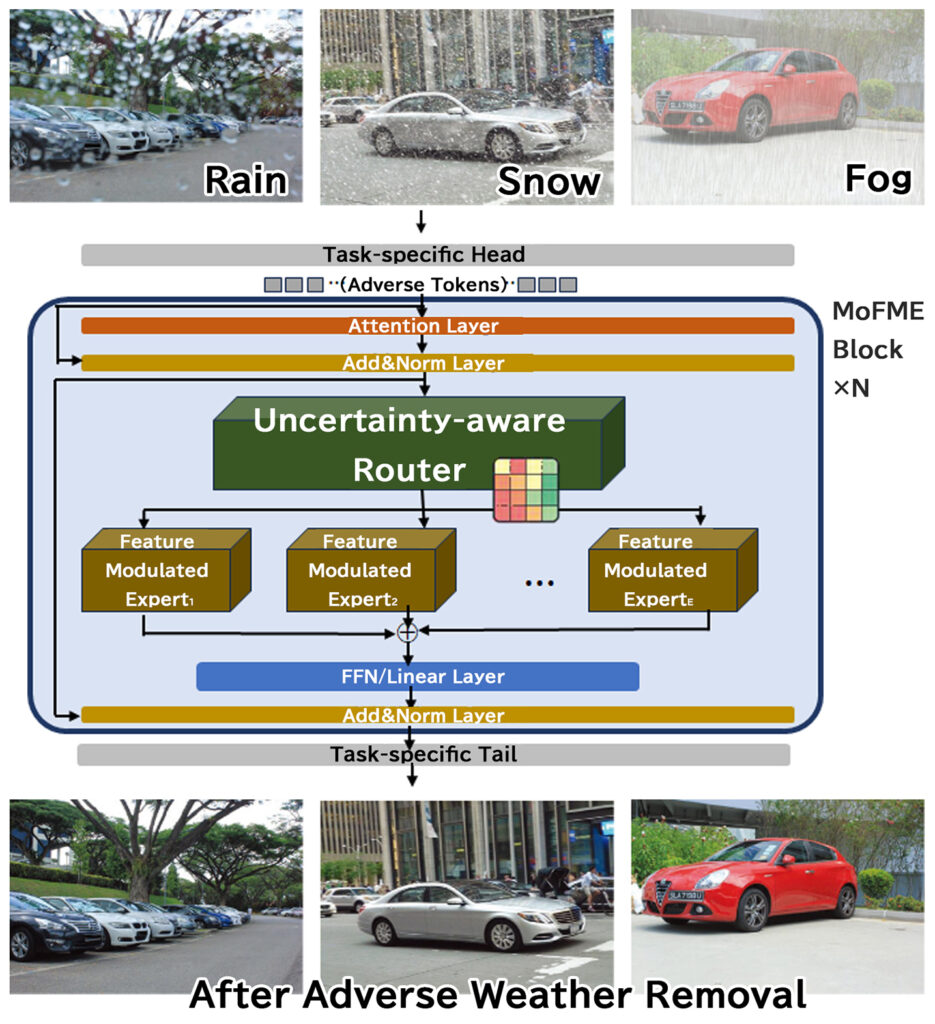

To solve the above issues, the joint development team created “Mixture-of-Feature-Modulation-Experts” (MoFME). This weather removal AI can remove rain, snow, and fog, which degrade image recognition accuracy, with a single ensemble model and with one-third the number of parameters compared to conventional models.

Two new components have been introduced in this AI. Image recognition and segmentation in adverse weather previously required multiple expert models depending on the weather and task. With these components, the same tasks can be handled by a single ensemble model with a practical amount of calculation.

First is the “Feature Modulated Expert,” which expresses the parameters of multiple expert models using linear transformation weights. Rather than learning the parameters of different expert models individually, the AI represents them through linear modulation of a specific expert model, reducing the total number of parameters and the amount of calculation.
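The idea of deriving several experts from one shared expert through linear modulation can be illustrated with a minimal sketch. It is not the MoFME implementation: the single dense layer, the per-expert scale and shift vectors (`gamma`, `beta`), and the toy sizes `DIM` and `NUM_EXPERTS` are all assumptions chosen for illustration. It compares the parameter count of fully separate experts with that of one shared expert plus per-expert modulation weights.

```python
import random

def linear(x, weight, bias):
    """Apply one dense layer: y = W x + b."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weight, bias)]

def modulated_expert(x, weight, bias, gamma, beta):
    """Hypothetical modulated expert: shared layer, then a per-expert scale and shift."""
    hidden = linear(x, weight, bias)
    return [g * h + b for g, h, b in zip(gamma, hidden, beta)]

def count_full_experts(dim, num_experts):
    """Parameters when every expert owns its own dense layer."""
    return num_experts * (dim * dim + dim)

def count_modulated_experts(dim, num_experts):
    """Parameters for one shared dense layer plus a (gamma, beta) pair per expert."""
    return (dim * dim + dim) + num_experts * 2 * dim

# Toy sizes, assumed for illustration only (not taken from the paper).
DIM, NUM_EXPERTS = 64, 8
rng = random.Random(0)

weight = [[rng.gauss(0.0, 0.1) for _ in range(DIM)] for _ in range(DIM)]
bias = [0.0] * DIM
gammas = [[1.0 + rng.gauss(0.0, 0.1) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]
betas = [[rng.gauss(0.0, 0.1) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]
x = [rng.gauss(0.0, 1.0) for _ in range(DIM)]

# Every expert output reuses the same shared weights; only gamma and beta differ.
outputs = [modulated_expert(x, weight, bias, g, b) for g, b in zip(gammas, betas)]

full = count_full_experts(DIM, NUM_EXPERTS)
shared = count_modulated_experts(DIM, NUM_EXPERTS)
print(f"separate experts: {full} parameters")
print(f"modulated experts: {shared} parameters")
print(f"reduction in this toy setup: {1 - shared / full:.1%}")
```

The reduction printed here depends entirely on the toy sizes and should not be read as the 72% figure reported for MoFME, which was measured on full models.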

Second is an “uncertainty-aware router” that switches the contribution of each expert model according to the characteristics of the input image. Each expert model specializes in different weather tasks. If an expert model is not very confident in its weather removal results, it can be told to reduce its contribution, and vice versa. This effectively increases the reliability of the ensemble model and improves image recognition performance.
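A router that lowers the contribution of less confident experts can be sketched as follows. This is one simple way to do it, not the formulation used in MoFME: the sketch assumes each expert reports a variance as its uncertainty estimate and subtracts the log-variance from the router score before a softmax. The function names and the example numbers are hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def uncertainty_aware_weights(router_logits, variances):
    """Assumed rule: penalize each router score by the expert's log-variance.

    Equivalent to dividing each softmax gate by the variance and renormalizing,
    so a less confident expert (larger variance) receives a smaller weight.
    """
    adjusted = [logit - math.log(var)
                for logit, var in zip(router_logits, variances)]
    return softmax(adjusted)

def combine(expert_outputs, weights):
    """Weighted sum of the expert outputs, element by element."""
    dim = len(expert_outputs[0])
    return [sum(w * out[i] for w, out in zip(weights, expert_outputs))
            for i in range(dim)]

# Hypothetical example: three experts with equal router scores
# but very different confidence in their own restoration.
router_logits = [1.0, 1.0, 1.0]
variances = [0.1, 1.0, 10.0]      # lower variance = more confident
expert_outputs = [[1.0, 1.0], [2.0, 2.0], [3.0, 3.0]]

weights = uncertainty_aware_weights(router_logits, variances)
mixed = combine(expert_outputs, weights)
print("weights:", [round(w, 4) for w in weights])
print("mixed output:", [round(v, 4) for v in mixed])
```

With equal router scores, the weights here are decided by confidence alone, so the mixed output stays close to the most confident expert's result.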

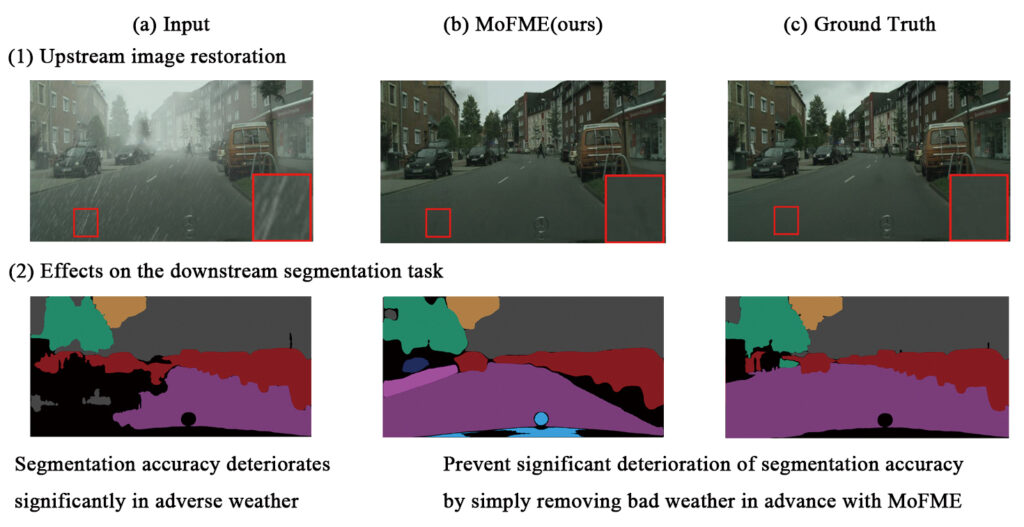

Figure 2 shows the results of adverse weather removal and segmentation using MoFME on the “RainCityscapes” dataset. The MoFME method removed both rain and fog, even from a complex image that contained both elements, and produced results equivalent to a clear image. In addition, when segmentation was run on the restored images, the image preprocessed with the MoFME method achieved the best segmentation results.

Accordingly, the MoFME method demonstrated image restoration performance that improved recognition accuracy over conventional methods. At the same time, it saved over 72% of parameters and 39% of inference time in image recognition and segmentation tasks for multiple adverse weather images.

Future Outlook

MoFME reduces the impact of rain, snow, fog, and other adverse weather elements on image recognition AI performance by removing these elements, which can significantly reduce image recognition accuracy, from images. MoFME can cleanly remove rain and fog with one-third the number of parameters compared to conventional methods. Thus, it can be used in situations that require robust image recognition outdoors, such as in the mobility and infrastructure fields.

Panasonic HD will continue to accelerate the social implementation of AI technology. Additionally, it will promote research and development of AI technology that will help customers in their daily lives and work.

About The Research:

“Efficient Deweather Mixture-of-Experts with Uncertainty-aware Feature-wise Linear Modulation” (https://arxiv.org/abs/2312.16610). This technology was developed under the framework of the “BAIR Open Research Commons*” led by UC Berkeley and is the result of research in which Denis Gudovskiy of Panasonic R&D Center America and Tomoyuki Okuno and Yohei Nakata of Panasonic HD Technology Division participated.

* An AI research institute established as a place for the world’s top researchers to openly collaborate across the boundaries of industry and academia. As of February 2024, 10 companies are participating, including Panasonic HD, Google, and Meta.

-26 March 2024-