ASIA ELECTRONICS INDUSTRY: YOUR WINDOW TO SMART MANUFACTURING

How Does AI-Powered Machine Vision Work in Manufacturing?

Since the 1970s, machine vision technology has significantly improved manufacturing processes. Using cameras, sensors, and software, this technology enables machines to perceive their work environment and perform a variety of tasks, from quality control to part positioning, that were previously done manually and were often subject to human error.

Recent advancements in artificial intelligence have boosted machine vision performance and overcome earlier limitations, such as costly, time-consuming integration and unreliable execution. Today’s AI-enhanced systems promise higher productivity and flexibility, more accurate measurements, lower costs, and improved safety by minimizing direct human involvement in hazardous areas.

In this blog post, Micropsi explains how new AI-vision technologies work, what benefits they bring, and how you can get started.

What Is Machine Vision and How Does It Work?

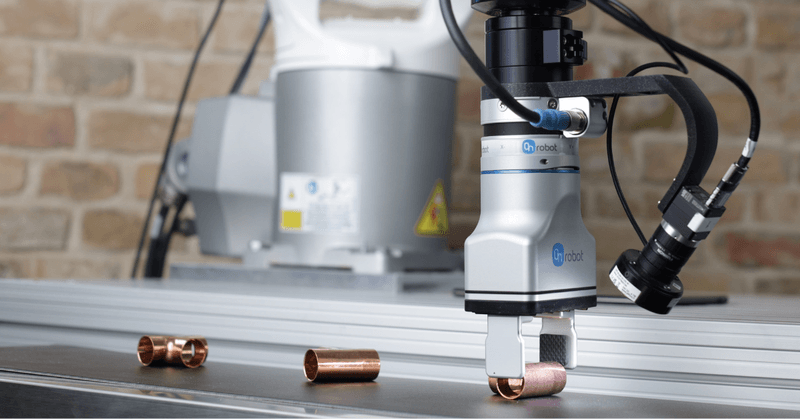

Essentially, machine vision technology enables machines to ‘see’ and make informed decisions based on visual input. Cameras and sensors capture visual data, which software then analyzes to perform a range of tasks such as visual inspection, defect detection, positioning and measuring parts, or identifying, sorting, and tracking products. A typical factory setup might feature multiple cameras along a production line, or robotic arms equipped with cameras to improve precision in positioning and handling.
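
To make the capture, analyze, and decide sequence concrete, here is a minimal Python sketch of a positioning task. It assumes a tiny grayscale frame stored as nested lists and a hypothetical `capture()` function standing in for a real camera; the brightness threshold of 128 is an arbitrary illustrative value, not one taken from any real system.

```python
from dataclasses import dataclass

# A grayscale frame: rows of pixel intensities, 0 (black) to 255 (white).
Image = list[list[int]]


@dataclass
class Detection:
    found: bool
    x: float = 0.0  # column of the part's center, in pixels
    y: float = 0.0  # row of the part's center, in pixels


def capture() -> Image:
    """Hypothetical camera stand-in: a dark 5x5 frame with a bright 2x2 part."""
    frame = [[10] * 5 for _ in range(5)]
    for r in (1, 2):
        for c in (2, 3):
            frame[r][c] = 220
    return frame


def analyze(frame: Image, threshold: int = 128) -> Detection:
    """Locate the part as the centroid of all pixels brighter than `threshold`."""
    hits = [(r, c) for r, row in enumerate(frame)
            for c, value in enumerate(row) if value > threshold]
    if not hits:
        return Detection(found=False)
    y = sum(r for r, _ in hits) / len(hits)
    x = sum(c for _, c in hits) / len(hits)
    return Detection(found=True, x=x, y=y)


def decide(detection: Detection) -> str:
    """Turn the analysis result into an action for the machine."""
    if not detection.found:
        return "reject: no part found"
    return f"pick at ({detection.x:.1f}, {detection.y:.1f})"


print(decide(analyze(capture())))  # pick at (2.5, 1.5)
```

Real systems replace each stage with far more capable components, but the division into capturing data, analyzing it, and acting on the result stays the same.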

Challenges of Traditional Machine Vision in Manufacturing

Traditional machine vision solutions demand a high level of expertise and near-ideal conditions to capture high-quality data. They often depend on modifying the captured data or the physical environment so that the system can interpret the input. Because they typically work by comparing images against reference images or CAD data, they struggle when conditions are dynamic or variable.
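
Comparison against a reference image can be sketched as template matching: slide a stored reference over the camera image and score each position by the sum of absolute differences (SAD). The Python sketch below is a generic illustration, not any particular vendor's algorithm; the images and the acceptance limit `MAX_SCORE` are invented. It shows why such comparisons suffer under variable conditions: raising every pixel by 40 brightness levels leaves the part in the same place, yet its score jumps from 0 to 160 and the match is rejected.

```python
# Grayscale images as rows of intensities (0-255).
Image = list[list[int]]

MAX_SCORE = 100  # hypothetical acceptance limit for a "good match"


def sad(patch: Image, template: Image) -> int:
    """Sum of absolute differences; 0 means a pixel-perfect match."""
    return sum(abs(p - t)
               for prow, trow in zip(patch, template)
               for p, t in zip(prow, trow))


def match_template(image: Image, template: Image) -> tuple[int, int, int]:
    """Slide the template over the image; return (row, col, score) of the best fit."""
    th, tw = len(template), len(template[0])
    if th > len(image) or tw > len(image[0]):
        raise ValueError("template is larger than the image")
    best = None
    for r in range(len(image) - th + 1):
        for c in range(len(image[0]) - tw + 1):
            patch = [row[c:c + tw] for row in image[r:r + th]]
            score = sad(patch, template)
            if best is None or score < best[2]:
                best = (r, c, score)
    return best


reference = [[200, 50],
             [50, 200]]
scene = [[0, 0, 0, 0],
         [0, 0, 200, 50],
         [0, 0, 50, 200],
         [0, 0, 0, 0]]
# The same scene under brighter light: every pixel 40 levels higher (capped at 255).
brighter = [[min(v + 40, 255) for v in row] for row in scene]

for name, img in (("original", scene), ("brighter", brighter)):
    r, c, score = match_template(img, reference)
    verdict = "accept" if score <= MAX_SCORE else "reject"
    print(f"{name}: best at ({r}, {c}), SAD={score} -> {verdict}")
# original: best at (1, 2), SAD=0 -> accept
# brighter: best at (1, 2), SAD=160 -> reject
```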

Let’s consider a real example involving our customer HWL in Germany. At HWL, gear shafts are picked from a washing basket and placed upright on a large, circular, horizontal rack with pegs for heat treatment. Because the rack can be rotated, the pegs end up in unpredictable positions, which makes a conventional robotic solution infeasible. Moreover, the rack stands in a factory hall lit by natural light, and because the shafts are made of metal, they reflect that light and their appearance varies.

If HWL were to use a traditional vision system to pick and place the gear shafts, a vision expert would need to configure operations on the raw data or the physical environment so that the system could make sense of the scene and reliably locate the gear shafts. This could be done by controlling the lighting to prevent distortion from reflections or color changes, or by reducing the number of image colors and increasing contrast. Because these adjustments are tuned to specific conditions, traditional systems can be thrown off by changing sunlight, shifts in contrast, extreme viewing angles, or unexpected objects in the image. For this reason, HWL has performed this task manually in the past to ensure reliable execution.
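
The data-side adjustments mentioned above, reducing colors and increasing contrast, can be sketched in a few lines of Python. This is a simplified illustration on a single scanline (one row of pixels) with made-up values, assuming ITU-R BT.601 luma weights for the grayscale conversion, min-max contrast stretching, and a fixed binarization threshold of 128. Stretching cancels a uniform brightness shift, but a single reflection brighter than the part takes over the stretched range, and the shaft disappears from the binary result.

```python
Pixel = tuple[int, int, int]  # (R, G, B), each 0-255


def to_gray(row: list[Pixel]) -> list[int]:
    """Reduce color to one intensity channel (ITU-R BT.601 luma weights)."""
    return [round(0.299 * r + 0.587 * g + 0.114 * b) for r, g, b in row]


def stretch(gray: list[int]) -> list[int]:
    """Min-max contrast stretch: darkest pixel becomes 0, brightest becomes 255."""
    lo, hi = min(gray), max(gray)
    if hi == lo:  # flat row: there is no contrast to stretch
        return [0] * len(gray)
    return [round(255 * (v - lo) / (hi - lo)) for v in gray]


def binarize(gray: list[int], threshold: int = 128) -> list[int]:
    """1 where a pixel is treated as 'part', 0 where it is background."""
    return [1 if v >= threshold else 0 for v in gray]


dim = [60, 60, 110, 110, 60]     # shaft occupies pixels 2 and 3
bright = [v + 40 for v in dim]   # same scene, more daylight
glint = [60, 250, 110, 110, 60]  # a reflection at pixel 1

for name, row in (("dim", dim), ("bright", bright), ("glint", glint)):
    rgb = [(v, v, v) for v in row]  # neutral gray pixels as RGB input
    print(name, binarize(stretch(to_gray(rgb))))
# dim [0, 0, 1, 1, 0]
# bright [0, 0, 1, 1, 0]
# glint [0, 1, 0, 0, 0]
```

Each step is simple, but the threshold and preprocessing only work for the conditions they were tuned for, which is exactly the fragility described above.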

At HWL in Germany, a robot controlled by our MIRAI AI-vision software reliably picks and places reflective gear shafts.

The Transformative Role of Artificial Intelligence

A real game changer in recent years has been the integration of artificial intelligence, particularly deep learning, into machine vision. Loosely modeled on the way neurons in the human brain process information, these algorithms enhance the accuracy and efficiency of vision systems.

How AI-Vision Software Works

Deep learning algorithms use neural networks to learn from data and make predictions about inputs they have never seen. This allows them to respond appropriately to new scenarios after being shown only a few examples. Neural networks do not rely on predefined visual features or on scenes that exactly replicate their training examples. Instead, they learn which information is relevant to solving the problem and learn to ignore details that change from example to example, such as brightness, background, or reflections.
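
The idea that a learned model can discover which details to ignore can be illustrated at toy scale. The Python sketch below swaps a deep network for a single-layer perceptron, a far simpler learner and not the method used in MIRAI, trained on two made-up four-pixel patterns, each shown under three brightness offsets. Nothing tells it to disregard brightness; in this run it ends up with weights that sum to zero, so adding the same brightness to every pixel cannot change its output, and it still classifies both patterns at an offset it never saw.

```python
Example = tuple[list[int], int]  # (pixel intensities, label: +1 or -1)


def make_examples(offsets: list[int]) -> list[Example]:
    """Two toy patterns ('part' and 'background'), each under several brightness offsets."""
    part, background = [3, 1, 3, 1], [1, 3, 1, 3]
    data = []
    for off in offsets:
        data.append(([v + off for v in part], +1))
        data.append(([v + off for v in background], -1))
    return data


def train_perceptron(data: list[Example], epochs: int = 10) -> tuple[list[int], int]:
    """Classic perceptron rule: nudge the weights toward every misclassified example."""
    weights = [0] * len(data[0][0])
    bias = 0
    for _ in range(epochs):
        mistakes = 0
        for x, y in data:
            score = sum(w * xi for w, xi in zip(weights, x)) + bias
            if y * score <= 0:
                weights = [w + y * xi for w, xi in zip(weights, x)]
                bias += y
                mistakes += 1
        if mistakes == 0:  # every training example is now classified correctly
            break
    return weights, bias


def predict(weights: list[int], bias: int, x: list[int]) -> int:
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else -1


weights, bias = train_perceptron(make_examples([0, 1, 2]))
print(weights, bias)  # [2, -2, 2, -2] 0
# An offset of 100 never appeared in training, yet both patterns are still recognized.
print(predict(weights, bias, [103, 101, 103, 101]))  # 1
print(predict(weights, bias, [101, 103, 101, 103]))  # -1
```

A deep network learns much richer invariances, to reflections, backgrounds, and viewpoints, from raw images, but the principle is the same: the examples, not hand-written rules, determine what the model treats as irrelevant.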

These capabilities make AI-vision software more powerful while reducing the amount of human intervention required. Instead of modifying the environment or data, a user can train the neural network with just a few examples. Returning to the HWL example, let’s examine how a robot powered by our MIRAI AI-vision software was trained to handle the pick-and-place task.

With the MIRAI system, the robot’s behavior is derived from the camera’s observations. A user shows the system the movement it should perform and the variations it may encounter, while a camera mounted on the robot records the scene. During training at HWL, a user demonstrated to the MIRAI-controlled robot what the gear shafts might look like and how to pick and place them.

To do this, the user set up different situations, such as placing the gear shafts at different angles or exposing them to sunlight, so that the camera saw several versions of what the problem might look like. The user repeated this a few times to collect enough data for the neural networks to learn from. The AI then computed a skill, a trained task that generalizes across all the examples it was shown. After a few minutes, this skill was ready to run, and today it tells the robot how to locate and pick the gear shafts despite the variations it encounters.
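
The workflow of recording demonstrations across varied scenarios and then computing one skill from all of them can be sketched as follows. This is a deliberately simplified, hypothetical stand-in, not how MIRAI computes skills: instead of a neural network reading raw images, it assumes the shaft's offset from the image center has already been measured in pixels, and it fits a single least-squares gain that maps that offset to the robot motion the user demonstrated. The `Demonstration` fields, scenario names, and numbers are all invented for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Demonstration:
    scenario: str     # free-text label, e.g. "tilted shaft" (hypothetical)
    offset_px: float  # where the shaft appeared relative to the image center
    motion_mm: float  # how far the user guided the robot to reach it


def fit_skill(demos: list[Demonstration]) -> tuple[Callable[[float], float], float]:
    """Pool all scenarios and fit motion ~= gain * offset by least squares through the origin."""
    sxy = sum(d.offset_px * d.motion_mm for d in demos)
    sxx = sum(d.offset_px ** 2 for d in demos)
    if sxx == 0:
        raise ValueError("need at least one demonstration with a non-zero offset")
    gain = sxy / sxx

    def skill(offset_px: float) -> float:
        """Suggested robot motion (mm) for a newly observed offset (px)."""
        return gain * offset_px

    return skill, gain


demos = [
    Demonstration("tilted shaft", -40, -20),
    Demonstration("direct sunlight", 10, 5),
    Demonstration("shade", 60, 30),
    Demonstration("tilted shaft, sunlight", -80, -40),
]
skill, gain = fit_skill(demos)
print(gain)       # 0.5
print(skill(30))  # 15.0 (an offset that was never demonstrated)
```

Real demonstrations are noisy and the relationship need not be linear; a neural network learns both how to extract the relevant feature from the image and how to map it to motion.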

Outstanding Performance Through Real-Time Operation

Deep learning technology offers another groundbreaking feature: the ability to operate in real time. This is particularly valuable in dynamic environments and with moving components, and it makes execution more robust. At HWL, the rack holding the gear shafts can be rotated, so the position of the pegs can change at any time. The MIRAI-controlled robot nevertheless reaches the peg reliably because it continuously corrects its movement: during execution, the neural network reads an image from the camera stream every 60 ms and uses it to determine how to move the robot next, so that it accurately reaches the target.
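
This continuous correction is a closed feedback loop. The Python sketch below simulates it using the 60 ms cycle from the text, but everything else is an assumption: the rack's motion is reduced to a single position in millimeters, the camera-plus-network step is replaced by a direct measurement of the remaining error, and a simple proportional controller with a hypothetical gain of 0.5 stands in for the network's motion output. The `servo` function and its parameters are illustrative names only.

```python
CYCLE_S = 0.060  # one camera frame every 60 ms, as in the text


def servo(target: float, robot: float, gain: float, rack_speed: float,
          rotating_cycles: int, total_cycles: int) -> list[float]:
    """Simulate frame-by-frame correction; return the remaining error after each cycle."""
    errors = []
    for cycle in range(total_cycles):
        observed_error = target - robot  # what this frame reveals (simulated)
        robot += gain * observed_error   # move part of the way toward the peg
        if cycle < rotating_cycles:
            target += rack_speed         # the rack keeps turning between frames
        errors.append(target - robot)
    return errors


errors = servo(target=100.0, robot=0.0, gain=0.5, rack_speed=2.0,
               rotating_cycles=20, total_cycles=60)
print(f"simulated time: {len(errors) * CYCLE_S:.2f} s")  # 3.60 s
print(f"lag while rotating: {errors[19]:.2f} mm")         # 4.00 mm
print(f"final error: {errors[-1]:.6f} mm")                # 0.000000 mm
```

While the rack turns, this controller trails the peg by a constant lag of `rack_speed / gain` (here 4 mm); once the rack stops, the error halves every frame. A shorter cycle means the peg moves less between frames, which is one reason real-time operation matters.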

Benefits of AI-Powered Machine Vision

The introduction of machine vision has improved productivity, quality control, cost efficiency, and safety in the manufacturing industry. Advancements in artificial intelligence and machine learning are expanding its capabilities and applications, making it a critical technology for manufacturers. In the next blog post, we will take a closer look at the key benefits and applications of machine vision in manufacturing.

-14 May 2024-