ASIA ELECTRONICS INDUSTRY: YOUR WINDOW TO SMART MANUFACTURING

Samsung Blazes Trail to Memory-Centric Chip Solutions

Memory-centric SoC solutions are shaping up to be the Next Big Thing, as real-time, data-hungry applications in AI, IoT, and 5G demand computing resources with near-zero latency.

Samsung Electronics Co., Ltd. is at the forefront of this rising wave.

The world’s largest memory chip maker has unveiled groundbreaking processing-in-memory (PIM) technology at Hot Chips 33, a leading semiconductor conference.

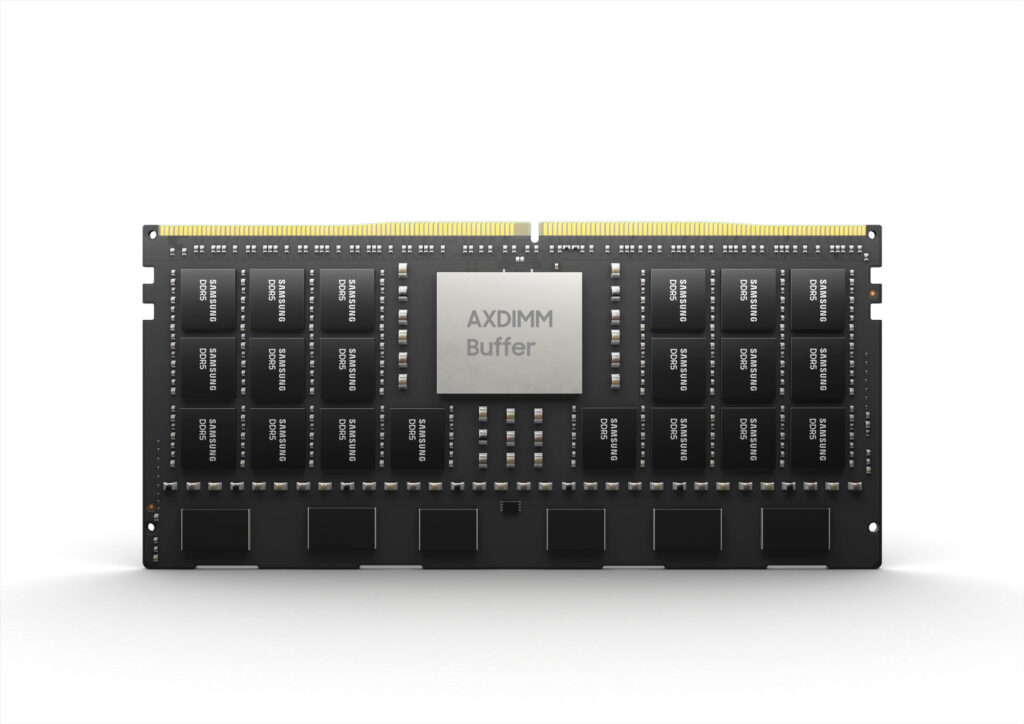

Called Acceleration DIMM, or AXDIMM, this memory-centric solution integrates an AI accelerator engine with a DIMM DRAM package at the module level.

Because the AI accelerator works inside the DRAM module, the module can process data and perform computation on its own, moving data only between the DRAM and the AI accelerator engine.

Pairing the AI accelerator with DRAM at the module level eliminates the conventional back-and-forth transfer of data between DRAM and the host CPU, dramatically reducing latency and power consumption while improving performance.
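To make the data-movement argument concrete, here is a minimal Python sketch under simplifying assumptions. It compares how many bytes cross the memory channel when the host CPU sums an array versus when a hypothetical in-module accelerator sums it locally and returns only the result. The function names and the 4-byte element size are illustrative assumptions, not details of Samsung’s AXDIMM, and the model ignores command traffic, latency, and energy.

```python
"""Toy model of data movement: host-side compute vs. near-memory (PIM) compute.

Hypothetical illustration only: it counts bytes crossing the DRAM-to-host
channel and does not model any real AXDIMM interface or instruction set.
"""

from dataclasses import dataclass


@dataclass
class Transfer:
    bytes_to_host: int  # bytes that cross the memory channel to the CPU
    result: float       # value the host ultimately receives


def host_side_sum(dram: list[float], elem_bytes: int = 4) -> Transfer:
    # Conventional path: every element is read out of DRAM across the
    # memory channel, and the CPU performs the reduction itself.
    moved = len(dram) * elem_bytes
    return Transfer(bytes_to_host=moved, result=sum(dram))


def near_memory_sum(dram: list[float], elem_bytes: int = 4) -> Transfer:
    # PIM-style path (assumed): an accelerator inside the module reduces the
    # data locally, so only the single result crosses the channel.
    local_result = sum(dram)
    return Transfer(bytes_to_host=elem_bytes, result=local_result)


if __name__ == "__main__":
    data = [1.0] * 1_000_000
    host = host_side_sum(data)
    pim = near_memory_sum(data)
    print(host.bytes_to_host, pim.bytes_to_host)  # 4000000 4
```

For a million 4-byte values, the host path moves about 4 MB across the channel while the near-memory path moves a single 4-byte result. Real gains depend on how much of a workload can actually be offloaded to the in-module engine.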

According to Samsung, the PIM module is now being tested on customers’ server platforms, where placing the AI accelerator circuitry near the DRAM has doubled performance while cutting system power consumption by 40%.

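An AI accelerator is dedicated hardware that implements AI algorithms directly in circuitry, whether hardwired into a custom chip or programmed onto an FPGA. Because the algorithm runs in silicon rather than as software on a general-purpose processor, it can process data far faster than a software counterpart.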

On-Device AI Chip Solution

Another groundbreaking technology on display is LPDDR5-PIM for mobile applications, which integrates an LPDDR5 chip package with an AI accelerator. Billed as an on-device AI chip, the mobile PIM opens the way for smartphones to perform AI functions entirely on the device, without relying on a data center for remote AI processing. In simulation tests, it doubled performance in voice detection, chatbot, and language translation tasks while reducing system energy consumption by 60%.

These two innovations build on Samsung’s earlier successful implementation of HBM-PIM, a memory chip with a built-in AI hardware accelerator.

Dubbed Aquabolt-XL, Samsung’s first HBM-PIM (high-bandwidth memory with processing-in-memory) is a system-in-package chip that integrates AI processing functions into Samsung’s HBM2 Aquabolt to accelerate high-speed data processing in supercomputers and AI applications.

The HBM-PIM has since been tested in the Xilinx Virtex UltraScale+ (Alveo) AI accelerator, where it delivered an almost 2.5x system performance gain along with a more than 60% cut in energy consumption.

“HBM-PIM is the industry’s first AI-tailored memory solution being tested in customer AI-accelerator systems, demonstrating tremendous commercial potential,” said Nam Sung Kim, senior vice president of DRAM Product & Technology at Samsung Electronics.

“Through standardization of the technology, applications will become numerous, expanding into HBM3 for next-generation supercomputers and AI applications, and even into mobile memory for on-device AI as well as for memory modules used in data centers.”

“Xilinx has been collaborating with Samsung Electronics to enable high-performance solutions for data center, networking and real-time signal processing applications starting with the Virtex UltraScale+ HBM family, and recently introduced our new and exciting Versal HBM series products,” said Arun Varadarajan Rajagopal, senior director, Product Planning at Xilinx, Inc.